A few years back, I found a simple trick in tandem with SIMD intrinsics to truly unleash the power of contemporary CPUs.

I put the strstr of LibC and the std::search of the C++ Standard Templates Library to the test and hit a throughput of around 1.5 GB/s for substring search on a solitary core.

Not too shabby, right?

But imagine, that the memory bandwidth could theoretically reach a striking 10-15 GB/s per core.

Going on this wild hypothesis that if the first 4 characters of a string are a match, it’s highly probable that the rest will match too, I brought this idea to life for x86 SSE, AVX, AVX-512, and Arm Neon. The result? I achieved memory bandwidth that outdid the standard by a whopping 5-10x factor. But alas, it remained a demo, with no practical applications… until recently.

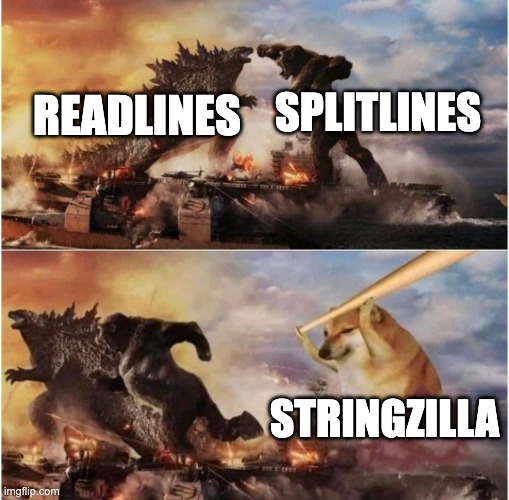

Meet StringZilla 🦖

I found myself knee-deep in a demo project for USearch, UStore, and UForm, which required parsing of a multi-terabyte collection of newline-delimited files.

Being a frequent Python user, I, naively, attempted open(...).readlines()...

A billion lines were simply too overwhelming for Python to handle. So, I went back to my demo code, breathed new life into it, and reshaped it into a Python library. And thus, StringZilla was born!

Str and File mimic the interface of a str, offering functions like contains, find, count, splitlines, and split, in addition to other operator overloads.

- Native: 1.5 GB/s.

- Heuristic: 4 GB/s.

- Heuristic with Arm NEON: 16 GB/s.

- Heuristic with x86 AVX: 13 GB/s.

Even with its minimalistic implementation that doesn’t even bother to dodge misaligned loads, StringZilla holds its ground.

The NEON version runs through 16 possible offsets simultaneously, employing SIMD to verify if a match is present and switching to scalar code if has_match == true for a particular block.

Here’s a sneak peek at the hot path:

| |

Interestingly, the same concept can be effortlessly extended to cater to needles under 4 characters long… in more ways than one. You can create standalone methods:

- For one-character needles, you would only require

matches0. - For two-character needles,

matches0andmatches1would suffice. - For three-character needles, you’d need

matches0,matches1, andmatches2.

Despite a more straightforward but slower solution, I opted to keep the code brief. You might consider developing special functions for counting the number of times a single character appears in the string…

| |

While an impressive exercise, the effectiveness of implementing it may be questionable if we’re already operating with a fast scalar variant.

Pseudo-SIMD

SIMD is an exceptional asset when the goal is optimal performance, assuming your hardware is known. The complexity arises when the software is distributed across millions of different devices globally. Balancing performance and adaptability, we may compile the same function multiple times with varying configurations, bundling them in a single binary and executing dynamic dispatch at the initiation stage.

However, dynamic dispatch is far from simple.

GCC provides the __builtin_cpu_supports function, but it’s limited to x86 flags.

On Linux, getauxval allows checking of hardware abilities, but it’s exclusively available for Arm.

This identical issue occurs in USearch and in all of my projects, from time to time compelling me to resort to JIT…

What if there was a more straightforward way? The Arm NEON registers are only 128 bits long. How much worse would the performance get if we use 64-bit general-purpose registers and emulate SIMD instructions with simple bitshifts and lookups? The answer - not much!

| |

Let’s start with the simple case when the needle is only a single character long.

The n is the “needle”, and ctz is short for “count trailing zeros”.

This would work on almost every modern CPU, and it’s easy to extend for 2, 3, and 4-character needles.

Adding boilerplate code to choose the suitable backend and match the misaligned head and tail, you can expect the following performance on the Apple M2 Pro CPU:

| Needle Length | STL | StringZilla | Backend |

|---|---|---|---|

| 1 | 3.4 GB/s | 12.25 GB/s | strzl_naive_find_char |

| 2 | 3.4 GB/s | 6.4 GB/s | strzl_naive_find_2chars |

| 3 | 3.4 GB/s | 4.1 GB/s | strzl_naive_find_3chars |

| 4 | 3.4 GB/s | 3.4 GB/s | strzl_naive_find_4chars |

| 5 | 1.7 GB/s | 3.1 GB/s | strzl_naive_find_substr |

This may not be that impressive after the previous chapter, but this doesn’t need any specialized hardware and can easily be integrated into WebAssembly and other runtimes.

Sorting

Aside from “search”, other common operations on strings include sorting, grouping, and partitioning.

For those, StringZilla brings Strs to replace the list[str].

The core idea is the same. Let’s Radix Sort based on the first 4 characters and finalize the rest with Quick Sort or any other standard algorithm. In C, the code is more mouthful.

| |

Sadly, we need more boilerplate to make it run on Windows and MacOS, as qsort_r isn’t broadly supported.

And when supported, the comparator callback signature is different:

Accounting for that nonsense, we can finally benchmark.

Taking the leipzig1M.txt and sorting 1 Million of its first whitespace-delimited tokens, we get:

std::sort: 139.82 milliseconds.strzl_sort: 26.43 milliseconds. 5x improvement.

For 10 Million tokens:

std::sort: 1'959.33 milliseconds.strzl_sort: 214.57 milliseconds. 9x improvement.

I have also implemented Merge Sort, Quick Sort, Insertion Sort, and a few other algorithm variations as part of an experiment. None of them performed well and were deprecated. It’s also worth noting, that this exact implementation only works for arrays with less than 4 Billion entries, but is easy to generalize further.

Applying on Large Datasets

This trick has already saved me a few thousand dollars in the last couple of weeks.

The most apparent scenario would be when I read a huge file and split its parts between processes (pages[p::n]).

| |

You probably have a few similar scenarios as well, so check out the repo and consider contributing 🤗 Possible next steps would be:

rfind,rsplit, and other reverse-order interfaces.- composing

Strsfrom Python strings. - stable in-place partitions and sorts (not so trivial without dynamic memory).

The last one is not so trivial, if you want it fast, in-place, and without using any dynamic memory. It probably deserves a separate article, but the next one is again about abusing USearch ↩️